MBZUAI Nexus Speaker Series

March 10, 2026 — MBZUAI European AI Forum

Where AI’s brightest minds connect to exchange ideas, challenge perspectives, and shape the future of artificial intelligence.

Nexus Events

Academic Calendar Events

AI Speaker Series

Distinguished Lecture Series

Philip H. Knight Professor of Economics and Dean, Emeritus, at Stanford Graduate School of Business

Lecture Halls 1 & 2

3rd Floor, Building 1B, MBZUAI

This session examines the development and scaling of large-scale AI models, with attention to the underlying infrastructure and economic dynamics that support them, including data centres, electricity demand, investment trends, market valuations, and the risk of speculative bubbles. It then turns to the real-world impacts of AI, considering multiple dimensions and the available evidence of progress across key domains. These include health and biomedical science, economic performance, productivity and growth, challenges of diffusion and adoption, inclusive growth, education, and implications for security and defense. The discussion also addresses labour market effects, with particular focus on the balance between automation and human–machine collaboration, as well as cross-country survey evidence on public attitudes toward AI. Where possible, estimates of the timing and sequencing of impacts across these dimensions will be explored. If time permits, recent advances in robotics will also be discussed.

Michael Spence is a senior fellow at the Hoover Institution and Philip H. Knight Professor and dean, emeritus, at Stanford Graduate School of Business. He is the chairman of an independent Commission on Growth and Development, created in 2006 and focused on growth and poverty reduction in developing countries. He studied at Yale University, the University of Oxford and Harvard University, earning a Ph.D. in economics in 1972. He taught at Harvard and at Stanford University, serving as dean of the latter’s business school in the 1990s. In 2001, he was awarded the Nobel Memorial Prize in Economic Sciences for his contributions to the analysis of markets with asymmetric information. He also received the John Bates Clark Medal, awarded by the American Economic Association to economists under 40. Through his research on markets with asymmetric information, Michael Spence developed the theory of “signaling” to show how better-informed individuals in a market communicate their information to the less well informed to avoid the problems of adverse selection. His own research emphasized education as a productivity signal in job markets, while subsequent research has suggested many other implications, e.g., how firms may use dividends to signal their profitability to agents in the stock market. He has served as a member of the boards of directors of General Mills, Siebel Systems, Nike, and Exult, and of a number of private companies. From 1991 to 1997, he was chairman of the National Research Council Board on Science, Technology, and Economic Policy. Among his many honors, Spence was elected a fellow of the American Academy of Arts and Sciences in 1983 and was awarded the David A. Wells Prize for outstanding doctoral dissertation at Harvard University in 1971. He is a member of the American Economic Association and a fellow of the Econometric Society.

Emeritus Professor of Oncological Imaging at the University of Oxford

Distinguished Professor in Computer Vision and former Member of the Board of Trustees at MBZUAI

Executive Theater, Knowledge Center, MBZUAI

Professor Sir Michael Brady is CEO of the Oxford Community Diagnostic Centre and Emeritus Professor of Oncological Imaging at the University of Oxford, having retired from his Professorship in Information Engineering (1985-2010). He is also Distinguished Professor in Computer Vision and a former Member of the Board of Trustees at the Mohamed bin Zayed University of Artificial Intelligence in Abu Dhabi. Prior to Oxford, he was Senior Research Scientist in the Artificial Intelligence Laboratory at MIT, where he was one of the founders of the Robotics Laboratory. Mike is the author of over 400 articles and 40 patents in computer vision, robotics, medical image analysis, and artificial intelligence, and the author or editor of ten books. He was Editor of the Artificial Intelligence Journal (1987-2002) and founding Editor of the International Journal of Robotics Research (1981-2000). Mike was co-Director of the Oxford Cancer Imaging Centre, one of four national cancer imaging centres in the UK.

Mike has been elected a Fellow of the Royal Society, Fellow of the Royal Academy of Engineering, Membre Associé Etranger of the Académie des Sciences, Honorary Fellow of the Institution of Engineering and Technology, Fellow of the Institute of Physics, Fellow of the Academy of Medical Sciences, and Fellow of the British Computer Society. He was awarded the IEE Faraday Medal for 2000, the IEEE Third Millennium Medal for the UK, the Henry Dale Prize (for “outstanding work on a biological topic by means of an original multidisciplinary approach”) by the Royal Institution in 2005, and the Whittle Medal by the Royal Academy of Engineering in 2010.

Mike was knighted in the New Year’s honours list for 2003.

Fortinet Founders Professor of Materials Science and Engineering at Stanford University

Lecture Halls 1 & 2

3rd Floor, Building 1B, MBZUAI

Yi Cui is the Founding Faculty Director of the Stanford Sustainability Accelerator, former Director of the Precourt Institute for Energy, and Fortinet Founders Professor of Materials Science and Engineering and of Energy Science and Engineering at Stanford University. He earned his bachelor’s degree in chemistry in 1998 from the University of Science & Technology of China and his PhD in chemistry from Harvard University in 2002. He was a Miller Postdoctoral Fellow at the University of California, Berkeley from 2002 to 2005. He joined the Stanford faculty in 2005.

A preeminent researcher of nanotechnologies for better batteries and other sustainability materials technologies, Cui has published more than 600 papers and is one of the world’s most cited scientists, with an h-index of 298. In 2014 he was ranked No. 1 worldwide in Materials Science by Thomson Reuters. He served as an associate editor and executive editor of Nano Letters for more than a decade. He is a co-director of the Battery 500 Consortium, the Bay Area Photovoltaic Consortium, and the Stanford StorageX Initiative. He is the Director of the Aqueous Battery Consortium, a $62.5M energy innovation hub funded by the US Department of Energy.

He has founded six companies to commercialize technologies from his lab: Amprius Inc. (NYSE: AMPX), 4C Air Inc., EEnotech Inc., LifeLabs Design Inc., EnerVenue Inc. and Zero Inc.

Cui is an elected member of the US National Academy of Sciences, an elected foreign member of the Chinese Academy of Sciences, and a fellow of the American Association for the Advancement of Science, the Materials Research Society, the Electrochemical Society, and the Royal Society of Chemistry. His selected honors include the Global Energy Prize (2021), the Ernest Orlando Lawrence Award (2021), the Materials Research Society Medal (2020), the Electrochemical Society Battery Technology Award (2019) and the Blavatnik National Laureate (2017).

Emeritus Professor of Oncological Imaging at the University of Oxford and Distinguished Professor in Computer Vision and former Member of the Board of Trustees at MBZUAI

Lecture Halls 1 & 2

3rd Floor, Building 1B, MBZUAI

With reference to two huge and growing global healthcare problems, cancer and the metabolic syndrome, I outline work we have done to bring medical image analysis and AI into routine clinical use.

Lecture Halls 1 & 2

3rd Floor, Building 1B, MBZUAI

A world model, the supposed algorithmic surrogate of the real-world environment that biological agents experience and act upon, has emerged as a prominent topic in recent years because of the growing need to develop virtual agents with artificial (general) intelligence. There has been much debate on what a world model really is, how to build it, how to use it, and how to evaluate it. In this talk, starting from the imagined world of the science-fiction classic Dune and drawing inspiration from the concept of “hypothetical thinking” in the psychology literature, we offer critiques of several schools of thought on world modeling and argue that the primary goal of a world model is to simulate all actionable possibilities of the real world for purposeful reasoning and acting. Building on these critiques, we propose a new architecture for a general-purpose world model, based on hierarchical, multi-level, and mixed continuous/discrete representations and a generative, self-supervised learning framework, with an outlook toward a Physical, Agentic, and Nested (PAN) AGI system enabled by such a model.

Professor in the Department of Electrical Engineering and Computer Science at UC Berkeley

Executive Theater, Knowledge Center, MBZUAI

Recent advancements in AI and LLM agents have unlocked powerful new capabilities across a wide range of applications. However, these advancements also bring significant risks that must be addressed. In this talk, I will explore the various risks associated with building and deploying Agentic AI and discuss approaches to mitigate them. I will also examine how frontier AI and LLM agents could be misused, particularly in cyber security attacks, and how they may reshape the cyber security landscape. Ensuring a safe AI future demands a sociotechnical approach. I will outline our recent proposal for a science- and evidence-based AI policy, highlighting key priorities to deepen our understanding of AI risks, develop effective mitigation approaches, and guide the development of robust AI policies.

Prof. Song’s research interests lie in deep learning and security. She has studied diverse security and privacy issues in computer systems and networks, in areas ranging from software security, network security, database security, distributed systems security, and applied cryptography to the intersection of machine learning and security. She is the recipient of various awards including the MacArthur Fellowship, the Guggenheim Fellowship, the NSF CAREER Award, the Alfred P. Sloan Research Fellowship, the MIT Technology Review TR-35 Award, the George Tallman Ladd Research Award, the Okawa Foundation Research Award, the Li Ka Shing Foundation Women in Science Distinguished Lecture Series Award, Faculty Research Awards from IBM, Google, and other major tech companies, and Best Paper Awards from top conferences. She obtained her Ph.D. degree from UC Berkeley. Prior to joining the UC Berkeley faculty, she was an Assistant Professor at Carnegie Mellon University from 2002 to 2007.

Professor of Electrical Engineering and Computer Science and Director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT

Executive Theater, Knowledge Center, MBZUAI

Artificial intelligence is leaving the cloud and entering the world, not as abstract code, but as a property of physical systems themselves. This is the promise of Physical AI: intelligence that is compact, adaptive, and embodied, inspired by the dynamics of living systems. Such AI could make our technologies more efficient, trustworthy, and human-centered, but it also forces us to confront profound questions. What does it mean when intelligence no longer sits apart from the world, but is woven into its fabric? Will Physical AI become a foundation for resilience and care, or will it bind us to technologies we cannot escape or control?

Physical Intelligence is achieved when AI’s power to understand text, images, signals, and other information is used to make physical machines such as robots intelligent. However, a critical challenge remains: balancing AI’s capabilities with sustainable energy usage. To achieve effective physical intelligence, we need energy-efficient AI systems that can run reliably on robots, sensors, and other edge devices. In this talk I will discuss the energy challenges of foundational AI models, I will introduce several state space models and explain how they achieve energy efficiency, and I will talk about how state space models enable physical intelligence.

Daniela Rus is the MIT Panasonic Professor of Computer Science and Director of the Computer Science and Artificial Intelligence Laboratory (CSAIL) at MIT. Prof. Rus's research interests are in robotics and artificial intelligence. The key focus of her research is to develop the science and engineering of autonomy and intelligence. Prof. Rus has served as a member of the President’s Council of Advisors on Science and Technology (PCAST) and of the Defense Innovation Board, and as a US expert for the Global Partnership on AI. She is a senior visiting fellow at MITRE Corporation. She currently serves on the board of directors of Symbotic, SymphonyAI, and Mass Robotics, as well as on the Board of Trustees for MBZUAI. She is a co-founder and board member of LiquidAI, ThemisAI, and Venti Technologies. Prof. Rus is a MacArthur Fellow, a fellow of ACM, IEEE, AAAI and AAAS, and a member of the National Academy of Engineering, the National Academy of Sciences, and the American Academy of Arts and Sciences. She is the recipient of the Engelberger Award for robotics, the John Scott Medal, the IEEE Edison Medal, the IEEE Robotics and Automation technical award, and the IJCAI John McCarthy Award. She earned her PhD in Computer Science from Cornell University. Prof. Rus aspires to help build a world where robotics and AI systems help people with physical and cognitive work, accelerate scientific discovery, and enable solutions to the grand challenges facing humanity. She is the co-author of the books The Heart and The Chip: Our Bright Future with Robots and The Mind’s Mirror: Risk and Reward in the Age of AI.

MBZUAI hosted the inaugural Natural Language Processing Symposium under the AI Quorum series. The symposium featured a series of presentations by leading experts in NLP and culminated in a panel discussion on the topic ‘Where are we at, where should we be, and how do we get there?’

Images of the event will be available soon.

Prof. Eran Segal, Chair of MBZUAI’s Computational Biology Department, will host a symposium with internationally renowned researchers on the future of Computational Biology and how it aligns with global public health priorities. The symposium will cover diverse areas, including Personalized Medicine, Foundation AI Models for Biology, Multi-omics and Integrative Data Analysis, Population-level Cohort and EHR Analysis, Epidemiology, and Biomedical Imaging. We invite you to register to attend the talks and hear about cutting-edge research in the field.

Prof. Yoshihiko Nakamura, Chair of MBZUAI’s Robotics Department, has assembled a group of highly motivated young scholars and will host a symposium about the future of AI in Robotics.

A snapshot of MBZUAI’s plans for its fast-growing HCI department. Prof. Elizabeth Churchill, Professor and Department Chair of HCI at MBZUAI, shared her ideas about key focus areas for the new department and discussed collaboration opportunities with interested parties.

The MBZUAI Single Cell Summer Workshop is a capacity-building event focused on helping members of the Abu Dhabi region’s bioinformatics community get to know each other and acquire practical skills for working with single-cell omics data. This hands-on workshop introduces participants to the scverse ecosystem of Python tools for single-cell data analysis, and in particular to the scvi-tools framework for probabilistic modelling of single-cell omics data.

Participants should bring a laptop.

Friday activities will require familiarity with the command line.

Participation in the workshop is free.

Note: If you would like to give a 20-minute participant talk, a few slots are still available; please indicate this when filling in the registration form and contact Eduardo at eduardo.beltrame@mbzuai.ac.ae.

The MBZUAI Silicon Valley AI Forum was an opportunity to bring together like-minded AI specialists to meet and discuss the future of AI. The event was held at the iconic Computer History Museum in Mountain View, CA.

MBZUAI Provost Tim Baldwin opened the proceedings with an overview of how MBZUAI, the world’s first Artificial Intelligence university, has been taking a leading role in developing research and education programs in a range of AI-related areas.

The event, which hosted 110 AI specialists from a range of institutions on the US West Coast, then featured three panel discussions focusing on:

As AI-driven solutions increasingly address global grand challenges, events such as the MBZUAI AI Forum facilitate the open exchange of views in a relaxed atmosphere, leading to constructive discussion and new ideas, all in the inspiring surroundings of the Computer History Museum!

MBZUAI is proud to unveil the Institute of Foundation Models (IFM) — a bold, global initiative uniting top AI talent across Abu Dhabi, Silicon Valley, and Paris. The mission: to advance the next generation of foundation models and deliver their benefits to communities worldwide.

We invited researchers, technologists, and innovators to join us in Silicon Valley as we celebrated this milestone and fostered connections within the broader AI community.

Images of the event will be available soon.

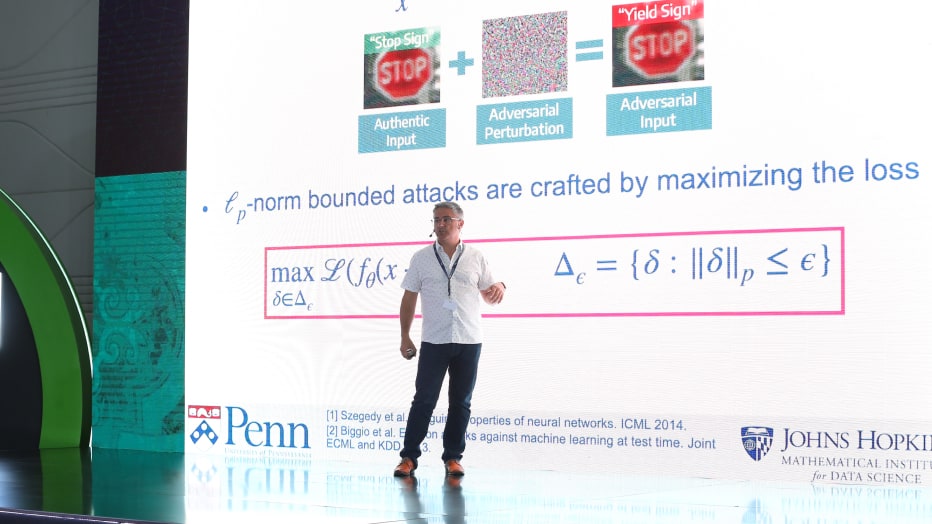

As foundation models become increasingly integrated into critical applications, ensuring their trustworthiness is paramount. The International Symposium on Trustworthy Foundation Models brought together researchers, industry leaders, and policymakers to discuss key challenges and advancements in building safe, fair, and robust AI systems. This symposium explored topics such as model safety, privacy, fairness, interpretability, causality, and robustness, with a particular emphasis on real-world deployment and governance. Through two days of invited talks, networking discussions, and contributed research, we sought to foster collaboration and drive innovation in the responsible development of foundation models.

Specifically, the objectives of the symposium were:

• Exchanging ideas, e.g., keynote speeches from world-leading researchers and contributed talks from active researchers;

• Building networks and promoting potential collaborations, e.g., promoting internal collaborations within MBZUAI and external collaborations with rising-star and world-leading researchers;

• Inspiring junior researchers to do better research, e.g., mentoring research students and postdocs at MBZUAI to do high-quality research.

Organizing committee:

General chair: Kun Zhang, MBZUAI; Tongliang Liu, MBZUAI/USYD

Program chair: Bo Han, HKBU/RIKEN; Bo Li, UIUC

Invited faculty talk session chair: Nils Lukas, MBZUAI

Rising-star presentation session chair: Salem Lahlou, MBZUAI

PhD mentoring session chair: Mingming Gong, MBZUAI/UoM

Local arrangement chair: Runqi Lin, MBZUAI/USYD

Local hosts:

Maria Pereira, MBZUAI

Analyne Mata, MBZUAI

The MBZUAI East Coast AI Forum was an opportunity to bring together like-minded AI specialists to meet and discuss the future of AI.

MBZUAI Provost Tim Baldwin opened the proceedings with an overview of how MBZUAI, the world’s first Artificial Intelligence university, has been taking a leading role in developing research and education programs in a range of AI-related areas.

The event, which hosted over 130 AI specialists from a range of institutions on the US East Coast, then featured three panel discussions focusing on:

As AI-driven solutions increasingly address global grand challenges, events such as the MBZUAI AI Forum facilitate the open exchange of views in a relaxed setting, leading to constructive discussion, new ideas, and the occasional controversy!

MBZUAI is excited to participate in the 2025 International Conference on Machine Learning (ICML), a leading global forum for machine learning research. We’ll be hosting a special reception during the conference at the Vancouver Convention Centre. We look forward to connecting with the community and engaging with fellow researchers and professionals there!

The annual international conference on Intelligent Systems for Molecular Biology (ISMB) is the flagship meeting of the International Society for Computational Biology (ISCB). The 2025 meeting is the 33rd ISMB conference, which has grown to become the world’s largest bioinformatics and computational biology conference. Joining forces with the European Conference on Computational Biology (the 24th Annual Conference), ISMB/ECCB 2025 will be the year’s most important computational biology event!

Visit our booth at ISMB 2025 to explore how MBZUAI is advancing AI-driven research. Meet our team, learn about our work, and discover opportunities to collaborate or join us.

MBZUAI is thrilled to be attending the 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025) and will be hosting a special reception during the conference. The exact location of the reception will be announced soon. We look forward to connecting with the community and seeing everyone there!

MBZUAI is proud to participate in the 2025 Joint Statistical Meetings (JSM), the North American conference bringing together the statistics and data science community. While the main conference will be held at the Music City Center, our special reception will take place at the Omni Nashville Hotel, 250 Rep. John Lewis Way S, Nashville, TN 37203. We look forward to connecting with fellow researchers and professionals there!

MBZUAI is proud to take part in Interspeech 2025, the world’s largest and most comprehensive conference on the science and technology of spoken language processing. We’ll be hosting a special reception during the event and look forward to connecting with the speech and language research community from around the world.

Director of the Center for Teaching and Learning and Assistant Professor of Natural Language Processing

MBZUAI SYMPOSIUM ON TEACHING AND LEARNING

This symposium explores practices of teaching and learning in higher education, and how artificial intelligence gives us the opportunity to innovate and rethink. Through case studies, research insights, and institutional practices, speakers will examine the fundamentals of student learning and faculty engagement, and how AI challenges and invigorates models of instruction, assessment, and academic integrity. Topics include competency-based education, authentic learning design, team-based learning, and the evolving role of faculty. A panel discussion will invite critical dialogue amongst speakers and attendees on the central need to preserve the humanity of learning within the context of technological innovation. Bringing together educators from local and international institutions, the event aims to foster collaboration and spark new thinking on how to navigate AI’s transformative impact on education with responsibility and purpose.

Prof. Eran Segal, Chair of MBZUAI’s Computational Biology Department, will host a symposium with internationally renowned researchers on the future of Computational Biology and how it aligns with global public health priorities. The symposium will cover diverse areas, including Personalized Medicine, Foundation AI Models for Biology, Multi-omics and Integrative Data Analysis, Population-level Cohort and EHR Analysis, Epidemiology, and Biomedical Imaging.

MBZUAI is excited to participate in ICCV 2025, one of the world’s leading conferences in computer vision research. We’ll be hosting a special reception during the event and look forward to connecting with fellow researchers, collaborators, and vision enthusiasts from around the globe.

Following the success of the Dagstuhl Seminar in 2024, this focused workshop on “Rethinking the Role of Bayesianism in the Age of Modern AI” will take place from October 27 to 31, 2025. The gathering will bring together researchers exploring the frontiers of Bayesian Machine Learning and Deep Learning in a collaborative atmosphere.

Despite the recent success of large-scale deep learning, these systems still fall short in terms of their reliability and trustworthiness. They often lack the ability to estimate their own uncertainty in a calibrated way, encode meaningful prior knowledge, avoid catastrophic failures, and reason about their environments to avoid such failures.

Bayesian deep learning (BDL) has harbored the promise of achieving these desiderata by combining the statistical foundations of Bayesian inference with the practically successful engineering solutions of deep learning methods. However, BDL methods have often fallen short of this promise in terms of real-world impact.

This workshop aims to rethink and redefine the promises and challenges of Bayesian approaches; elucidate which Bayesian methods might prevail against their non-Bayesian competitors; and identify key application areas where Bayes can shine. The event is planned as a small, discussion-driven gathering with a relaxed and collaborative atmosphere, and is designed to encourage deep exchange, new ideas, and informal collaboration across intersecting areas of research.

Bringing Learning, Vision, Language and Robotics together

In the post-GPT world, physical intelligence represents the next frontier in AI, enabling systems and agents to sense, act, and learn within uncertain, dynamic environments.

This invitation-only symposium brings together leading researchers and practitioners in AI, Computer Vision, Natural Language, and Robotics to explore progress, challenges, and future directions in embodied intelligence, spanning topics such as world models, sensor fusion, fast vs. slow thinking, simulation, explicit vs. latent representations, and modular vs. end-to-end approaches.

This workshop is organized by the Departments of Machine Learning, Computational Biology, and Statistics and Data Science at MBZUAI, in collaboration with the Department of Biostatistics at the Harvard T.H. Chan School of Public Health. It aims to bring together researchers from diverse disciplines who are interested in statistical analysis, machine learning, and their applications to real-world problems in biology.

The event will serve as a platform to share state-of-the-art methods, exchange perspectives, foster interdisciplinary collaboration, and identify key challenges and opportunities at the intersection of machine learning, statistical data science, and biological research.

The MBZUAI Winter School is part of the wider MICCAI 2026 initiatives, which will bring the global medical imaging community to Abu Dhabi. This program aims to increase research outcomes from the MENA region by creating opportunities for young researchers to learn from international experts and collaborate with peers. By welcoming students from low- and middle-income countries, the school supports capacity building and strengthens the region’s contribution to the global research landscape.

At the same time, the winter school will enhance MBZUAI’s visibility as a hub for advanced AI and healthcare research. It will attract faculty and students from around the world to engage with the university and encourage academic partnerships. Linked with the RISE community in the MICCAI society, the initiative reflects a strong commitment to supporting scientific excellence and ensuring that the MENA region plays an active role in shaping future research directions.

This symposium on the Future of Human-Centered AI (HCAI) aims to proactively establish new research paradigms, moving interaction design from static tools to collaborative, intelligent partnerships. We will define the ethical and technical interventions—from Neural Interfaces to Explainable AI—required to achieve positive human outcomes. The immediate impact will be actionable reports, new research partnerships, and curriculum development, ensuring HCAI leads the responsible development of technology across critical domains like Healthcare and Education.

MBZUAI’s Department of Statistics and Data Science will host a workshop on the Frontiers of Statistical Inference, bringing together researchers to explore cutting-edge methods for reliable AI. The workshop is designed to encourage deep discussion, idea exchange, and informal collaboration across intersecting areas of statistics and machine learning.

The workshop will cover a broad set of topics at the intersection of statistics and AI, including:

Graduate students and early career researchers are especially encouraged to apply. This is a unique opportunity to engage with leaders in the field and discover future research directions.


MBZUAI is excited to participate in NeurIPS 2025, one of the world’s premier conferences in machine learning and artificial intelligence. We’ll be hosting a special reception during the event and look forward to connecting with fellow researchers, collaborators, and AI enthusiasts from around the globe.


Humanoid robotics is rapidly advancing from laboratory prototypes to real-world systems capable of perceiving, reasoning, and acting in both human-centered environments and hazardous scenarios. The second Abu Dhabi AI-Robotics Conference convenes researchers, clinicians, industry leaders, and policymakers to chart this transition with a clear focus on real deployments and measurable impact. We will examine advances in whole-body control, dexterous manipulation, and locomotion; the integration of foundation models and multimodal perception into robust visuomotor policies; and the formal methods, safety engineering, and evaluation protocols required for trustworthy operation around people. Case studies from manufacturing, logistics, and critical infrastructure will be paired with service and healthcare demonstrations—covering assistive care, rehabilitation, and hospital operations—to surface what truly generalizes, what fails in practice, and what standards, data, and hardware are still missing. By bringing together technical depth and application reality, the conference aims to accelerate a new generation of humanoids that are safe, certifiable, and economically viable, while reflecting regional priorities and global needs.

MBZUAI is delighted to participate in the 2025 International Conference on Statistics and Data Science (ICSDS), a key gathering for researchers advancing the frontiers of statistics, data science, and their applications. We’ll be hosting a special reception during the conference and look forward to engaging with the community, sharing insights, and building new collaborations.

This symposium will bring together leading researchers from around the world to share the latest advances in natural language and speech processing, explore new directions in multimodal and multilingual AI systems, and reflect on the persisting challenges and societal implications of language technologies. The discussion aims to foster scientifically grounded and responsible development of AI systems that are accessible, inclusive, trustworthy, and human-centric.

The Organizing Committee of the Abu Dhabi Edge AI Summit, an MBZUAI and NSF Athena AI Institute event, welcomes you to join us on February 2–4, 2026 at the Mohamed bin Zayed University of Artificial Intelligence. The Summit aims to foster collaboration between the Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) and the U.S. National Science Foundation AI Institute for Edge Computing Leveraging Next Generation Networks (Athena), with a focus on advancing research, entrepreneurship, and opportunities in the rapidly evolving field of Edge AI. Key players in the industry will share their insights on the development, societal impact, and future opportunities of Edge AI technologies. The Summit will bring together about 150 invited leading scholars, CTOs and executives from global technology companies, regional investors, Athena PIs, and prominent AI researchers from the region for forward-looking discussions on the future of Edge AI.

Following the transformative impact of the Human Genome Project, the next frontier in advancing human health lies in systematically understanding phenotypes—the complex manifestations of genetics, environment, and lifestyle over time.

Around the world, large-scale deep-phenotype, prospective longitudinal cohorts and biobanks are being established to meet this challenge. Among them, the Human Phenotype Project (HPP) is a leading example, with over 30,000 participants and comprehensive longitudinal profiling spanning genetics, multiomic measures, imaging, continuous monitoring, and extensive clinical and lifestyle data.

This inaugural annual event will convene international leaders in AI, biomedical science, and global health to explore how multimodal AI and deep phenotyping can accelerate breakthroughs from population cohorts to personalised medicine.


The MBZUAI Machine Learning Winter School: Representation Learning & GenAI is an intensive 5-day program that brings together world-leading researchers to explore cutting-edge developments in modern machine learning and generative artificial intelligence. This comprehensive program combines keynote presentations, technical lectures, and hands-on practical sessions, offering participants direct access to the latest research and methodologies from pioneers who are shaping the future of AI.

The winter school focuses on the revolutionary advances in deep learning that have enabled powerful generative capabilities across multiple domains. From large language models to diffusion models for images and videos, these breakthrough techniques are transforming how we approach AI research and applications.


Symposium on Security in the Age of AI

The rapid development of advanced AI technologies is reshaping security research and practice. Powerful machine learning models support complex analysis of large-scale and diverse data, which is key to addressing some of the most challenging cybersecurity problems. Large language models make it possible to effectively process, summarize, discover, and disseminate cyber threat intelligence. AI is increasingly integrated into software development, assisting with code generation, automated repair, and security-focused code reviews, helping developers avoid introducing vulnerabilities in the first place.

The widespread adoption of AI, meanwhile, introduces new security and privacy risks. Attackers manipulate model outputs through carefully crafted inputs, undermining the reliability of AI-driven systems. The collection and use of massive training data amplifies privacy concerns. Accessible generative models empower malicious users to automate social engineering, develop polymorphic malware, and scale their operations in ways that were previously not feasible.

The MBZUAI Symposium on Security in the Age of AI brings together researchers to explore these opportunities and challenges. The symposium will feature invited talks and technical discussions that span a broad set of topics, including those outlined above. Our goal is to foster a vibrant exchange of ideas and explore new research directions that strengthen security in an era shaped by transformative AI technologies.

Machine learning is becoming a central driver of progress across science and engineering: powering new capabilities in language and vision, enabling efficient discovery, and raising fresh questions about reliability, alignment, and responsible deployment. As the field moves quickly, breakthroughs increasingly come from collaboration and knowledge sharing that connect ideas across domains.

Mohamed bin Zayed University of Artificial Intelligence (MBZUAI) is pleased to host the ML Scholars Workshop in Abu Dhabi, convening an invited group of researchers and emerging scholars working at the forefront of machine learning. The workshop is designed as a gathering that prioritizes active exchange and collaboration, with ample space for candid Q&A, idea refinement, and new research connections.

Join us for this exclusive workshop bringing together leading machine learning scholars at MBZUAI in Abu Dhabi. Explore cutting-edge research, foster collaborations, and engage with the ML community.

Participants can look forward to:


The MBZUAI European AI Forum is an opportunity to bring together like-minded AI specialists to meet and discuss the future of AI. As AI-driven solutions increasingly address global grand challenges, MBZUAI, the world’s first artificial intelligence university, has taken a leading role in developing research and education programs across a range of AI-related areas. Founded just five years ago, MBZUAI is home to an international faculty of more than 120 renowned scientists and over 650 students, and is already ranked in the world’s top 10 for its AI fields (CSRankings).

The Forum, expected to host specialists from a range of institutions across Europe, will feature panel discussions focusing on:

Foundation models beyond scale

Mathematical challenges in machine learning

Human-centered embodied AI

As AI-driven solutions increasingly address critical global priorities, events such as the MBZUAI European AI Forum facilitate the open exchange of views in a relaxed setting, leading to constructive discussion, new ideas, and the occasional controversy!

Tim Baldwin

Eric Moulines (Forum Chair)

Ian Reid

Preslav Nakov

Yoshihiko Nakamura

Mladen Kolar

Elizabeth Churchill

(Organising Committee)

Dean of the Biological and Life Sciences Division, and Professor of Computational Biology

MBZUAI’s Department of Statistics and Data Science will host a focused workshop on Time, Space, and Shape (April 14 to 17, 2026) at Mohamed bin Zayed University of Artificial Intelligence in Abu Dhabi, UAE. The workshop will bring together researchers working on time series, spatio-temporal processes, and functional and shape data analysis to discuss emerging directions in modern statistical methodology and AI. Designed as a small, discussion-driven meeting of around 30 participants, the workshop will emphasize interaction, open exchange of ideas, and informal collaboration across neighboring communities.

The workshop will cover a broad set of topics centered on temporal, spatial, and geometric structure, including:

Graduate students and early career researchers are especially encouraged to participate. This is a unique opportunity to engage with leading experts in the field and help shape future research directions.

Agentic AI is quickly moving from “chat” to action: systems that can plan, use tools, browse the web, write and run code, and even operate a computer interface. As agents take on more automation, the security stakes rise, because failures are no longer just incorrect text outputs but real actions that can trigger data exposure, unsafe tool calls, unauthorized changes, or downstream harm.

This symposium brings together leading researchers and practitioners working on LLM/agent security, privacy, and trustworthy AI to develop a clearer threat landscape and identify practical defenses for real deployments (including prompt/indirect injection risks, poisoning, misuse, and end-to-end agent pipeline vulnerabilities).

The program features keynotes, invited talks, and a dedicated panel on securing tool-use and computer-use agents, followed by structured discussion to align on evaluation approaches, mitigation strategies, and shared benchmarks.

Deployment best practices, governance, and accountability for agentic AI systems

This workshop, Statistical Foundations of AI, will take place May 7–10, 2026, bringing together researchers at the intersection of statistics and artificial intelligence for an energetic and collaborative exchange of ideas. AI is rapidly reshaping scientific discovery, industry, and public life, yet the speed of progress can obscure the statistical principles that enable these methods—and, just as importantly, the mechanisms behind their failures. Despite striking successes, today’s AI systems can be difficult to validate, brittle in deployment, and poorly calibrated about what they do and do not know, raising pressing questions for high-stakes use. The workshop will highlight principled ways statistical thinking—modeling, inference, uncertainty quantification, and theory—can deepen our understanding of modern AI, emphasizing frameworks that deliver conceptual clarity, practical diagnostics, and, where possible, meaningful guarantees that place AI’s capabilities on firmer scientific footing. Topics of interest include statistics for trustworthy AI (reliability, robustness, invariance, interpretability, calibration, and evaluation), uncertainty quantification (predictive uncertainty, Bayesian and frequentist perspectives, and decision-making under uncertainty), the mathematics of modern deep learning architectures including transformers, transformer-next models, the mathematics of pretraining and post-training/alignment, (discrete) diffusion models, and formal characterizations of chain-of-thought reasoning and other emergent behaviors.

Seville, Spain

Hangzhou, China

Sharm El Sheikh, Egypt

Vienna, Austria

United Kingdom

Vancouver, Canada

Our world-renowned faculty at MBZUAI are frequently invited to prestigious events worldwide to share their insights on the cutting edge of AI. Below, you’ll find some of our upcoming and more recent talks at esteemed institutions such as Harvard University, Stanford University, and more.

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Toward Public and Reproducible Foundation Models Beyond Lingual Intelligence | ODSC East 2025 | May 13 – 15, 2025 | More Details |
| Toward AI-Driven Digital Organisms | Biomedical Science and AI | April 30 – May 3, 2025 | |
| Toward Next Generation AI Systems Beyond Lingual Intelligence | Stanford University | March 10, 2025 | More Details |
| AI for Accelerating Invention | Princeton University | March 3, 2025 | More Details |
| AI, Science and Society | IP Paris | February 6 – 7, 2025 | More Details |
| Toward General and Purposeful Reasoning in Real World Beyond Lingual Intelligence | Columbia Engineering Lecture Series in AI | April 29, 2025 | More Details |
| Toward General and Purposeful Reasoning in Real World Beyond Lingual Intelligence | Open Data Science Conference (ODSC) | May 14, 2025 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Keynote Talk: The Long and Winding Road of NLP | 30th Anniversary Symposium of the Association for Natural Language Processing | October 19, 2024 | More Details |
| Safe, Open, Locally-aligned Language Models | Ho Chi Minh City University of Technology | December 18, 2024 | |
| Fact-checking Language Models: Generating the Truth, the Whole Truth, and Nothing but the Truth | Ho Chi Minh City University of Technology | December 17, 2024 | |
| Fact-checking Language Models: Generating the Truth, the Whole Truth, and Nothing but the Truth | Hanoi University of Science and Technology | December 17, 2024 | More Details |
| Safe, Open, Locally-aligned Language Models | VinAI | December 16, 2024 | More Details |
| Keynote Talk: Safe, Open, Locally-aligned Language Models | 13th International Symposium on ICT | December 14, 2024 | More Details |
| Safe, Open, Locally-aligned Language Models | George Mason University | December 2, 2024 | |
| Safe, Open, Locally-aligned Language Models | University of Maryland | December 2, 2024 | |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Research in Industry and Academic Contexts | ARPPID 2025 | February 1, 2025 | More Details |
| Panel Discussion | Future Health Summit | January 30, 2025 | More Details |
| AI for All: Unlocking an Inclusive Future with Technology | Special Olympics Global Center Summit | December 11, 2024 | More Details |
| Technology Talks Austria 2024 | Human Centered Transformation | September 12 – 13, 2024 | More Details |
| Keynote Speaker, Panelist | OZCHI, Sydney, Australia | November 29 – December 3, 2025 | More Details |
| Keynote Speaker, Panelist | EAT ArtsIT 2025 | November 7 – 9, 2025 | More Details |
| Featured Speaker and Moderator | Art X AI: Shaping Cultural Futures Assembly | November 15, 2025 | More Details |
| Panelist: The Future of Art Without AI: Is It Possible? | Manar Abu Dhabi 2025 | November 16, 2025 | |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Uncertainty, Asymmetry of Information, and Statistical Contract Theory | Bruno De Finetti, International Society for Bayesian Analysis, Venice, Italy | July 4, 2024 | More Details |
| Prediction-Powered Inference | Joint ISBA-Fusion 2024 Workshop, Venice | July 8, 2024 | More Details |
| Contracts, Uncertainty, and Incentives in Statistical Decision-Making | North American Economics and AI+ML Meeting of the Econometric Society, Ithaca, NY | August 14, 2024 | More Details |
| A Collectivist View on AI: Collaborative Learning, Statistical Incentives, and Social Welfare | AI-ML Systems Conference, Baton Rouge, LA | October 8, 2024 | More Details |
| Contracts, Uncertainty, and Incentives in Decentralized Machine Learning | NETGCOOP 2024 Conference, Lille, France | October 10, 2024 | |
| A Collectivist Vision of AI: Collaborative Learning, Statistical Incentives, and Social Welfare | RECSYS Conference, Bari, Italy | October 16, 2024 | More Details |
| Keynote Speaker | CCNC Conference, Shanghai, China | October 28, 2024 | |
| Invited Speaker | Harvard Statistics Colloquium, Cambridge, MA | | |
| Invited Speaker | Vannevar Bush Faculty Fellowship Summit | June 11, 2024 | |
| Keynote Speaker | AI, Science, and Society, Paris, France | February 6, 2025 | More Details |
| An Alternative View on AI: Collaborative Learning, Incentives, and Social Welfare | Next Generation AI and Economic Applications, Morocco | February 24, 2025 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Fundamental Research on Computation and Control of Humanoid Robot Motions | Japan Academy Prize | June 10, 2025 | More Details |
| Panelist in "Next Generation Mechanisms" | International Symposium of Robotics Research, Long Beach, CA, USA | December 12, 2024 | More Details |
| Embodied AI from Human Motion Data | The ShanghAI Lecture 2024 | November 28, 2024 | |
| Panelist in "Panel on Robotics Education and Culture" | Inauguration of Stanford Robotics Center, Stanford, CA, USA | November 2, 2024 | More Details |
| "Embodiment of AI and Biomechanics/Neuroscience" | IEEE-RSJ IROS, Abu Dhabi | October 17, 2024 | More Details |
| Will Humanoid and AI Show a Vista of the Intelligence? | IEEE ICRA@40, Rotterdam, Netherlands | September 24, 2024 | More Details |
| Distance between Language and Action | IEEE-RAS ICRA, Yokohama, Japan | May 17, 2024 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Towards Truly Open, Language-Specific, Safe, Factual, and Specialized Large Language Models | University of Illinois Urbana-Champaign | May 5, 2025 | More Details |
| Towards Truly Open, Safe, and Factual Large Language Models | MIT (USA) | June 2, 2025 | |
| Factuality Challenges in the Era of Large Language Models | University of Massachusetts Amherst (UMass Amherst) (USA) | June 30, 2025 | More Details |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | European Lab for Learning & Intelligent Systems (ELLIS) workshop (Manchester, UK) | June 16, 2025 | More Details |
| Factuality Challenges in the Era of Large Language Models | University of California at Berkeley | October 15, 2025 | |
| Towards Truly Open, Safe, and Factual Large Language Models | Nanyang Technological University | April 25, 2025 | |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | TU Darmstadt | March 13, 2025 | |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | IIT Gandhinagar | March 21, 2025 | |
| Towards Truly Open, Language-Specific, Safe, Factual, and Specialized Large Language Models | Sofia University | November 27, 2025 | More Details |
| Factuality Challenges in the Era of Large Language Models | University of Massachusetts Amherst | June 3, 2025 | |
| Towards Truly Open, Safe, and Factual Large Language Models | Massachusetts Institute of Technology | June 2, 2025 | |
| Towards Truly Open, Safe, and Factual Large Language Models | University of Illinois at Urbana-Champaign | May 5, 2025 | |
| Towards Truly Open, Safe, and Factual Large Language Models | Sofia University | November 30, 2024 | |
| Towards Truly Open, Safe, and Factual Large Language Models | GATE Institute 5-year Conference | November 20, 2024 | More Details |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | University of Montpellier | November 18, 2024 | |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | CentAI Institute | May 21, 2024 | |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | ISI Foundation | May 21, 2024 | |
| Factuality Challenges in the Era of Large Language Models: Can we Keep LLMs Safe and Factual? | National University of Singapore | May 16, 2024 | |
| Factuality Challenges in the Era of Large Language Models | École Polytechnique | April 2, 2024 | |
| Factuality Challenges in the Era of Large Language Models | Athens University of Economics and Business | March 8, 2024 | |
| Jais and Jais-chat: Building the World's Best Open Arabic-Centric Foundation and Instruction-Tuned Open Generative Large Language Models | ILSP & Archimedes NLP Theme Talk | March 7, 2024 | |
| Factuality Challenges in the Era of Large Language Models | Sabanci University | March 6, 2024 | |
| Towards Truly Open, Language-Specific, Safe, Factual, and Specialized Large Language Models | RADH'2025 | November 27 – 28, 2025 | More Details |
| Towards Truly Open, Safe, and Factual Large Language Models | Summit 333 | November 9 – 11, 2025 | More Details |
| Towards Truly Open, Safe, Factual, and Specialized Large Language Models | SREM'2025 | October 22 – 24, 2025 | More Details |
| Towards Truly Open, Language-Specific, Safe, Factual, and Specialized Large Language Models | RANLP'2025 | September 8 – 10, 2025 | More Details |
| Towards Truly Open, Language-Specific, Safe, Factual, and Specialized Large Language Models | AthNLP'2025 | September 4, 2025 | |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Visual Intelligence | AI Elevate Conference | December 13, 2025 | |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Personalized Medicine Based on Deep Human Phenotyping | World Immune Regulation Meeting | March 12 – 15, 2025 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Keynote Speaker | CoNLL 2024 | November 16, 2024 | More Details |
| Invited Speaker | KHIPU 2025 | March 13, 2025 | More Details |
| Towards Socially Intelligent Multimodal Artificial Agents | Pre-ACL 2025 Workshop | July 26, 2025 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| A System of Multiscale Foundation Models for Biology | AAAI 2025 | March 3, 2025 | More Details |
| Towards AI-Driven Digital Organism | AI + Biomedicine Seminar | February 18, 2025 | More Details |

| Talk Title | Event/Venue | Date | More Info |
|---|---|---|---|
| Causal representation learning: Uncovering the hidden world | University of Pennsylvania, Philadelphia, PA | September 16 – 17, 2024 | More Details |
| Learning hidden variables and causal relations | UC Davis | November 7 – 8, 2024 | More Details |
| Causal representation learning and its connection with decision making | Ohio State University | November 8, 2024 | More Details |
| Learning identifiable concepts for image generation and editing | Johns Hopkins University | November 9 – 10, 2024 | More Details |
| Revealing the Hidden Causal World | Carnegie Mellon University | November 22, 2024 | More Details |


MBZUAI is a global center of excellence dedicated to advancing artificial intelligence research, fostering interdisciplinary collaboration, and nurturing the next generation of AI pioneers.

Whether you are an esteemed faculty member, an ambitious student, or an AI professional seeking to drive impact, MBZUAI provides a unique environment where groundbreaking ideas thrive and meaningful innovation takes shape.

At the core of MBZUAI lies a commitment to transform knowledge into a force for positive change. Together we are shaping a future where education uplifts individuals and benefits communities worldwide.


If you would like to propose a research talk for the MBZUAI Nexus, submit your proposal below. The MBZUAI Nexus team will be in touch.